Introduction

In the fast-evolving world of AI, it's essential to keep track of your API costs, especially when building LLM-based applications such as Retrieval-Augmented Generation (RAG) pipelines in production. Experimenting with different LLMs to get the best results often involves making numerous API requests to the server, and each request incurs a cost. Understanding and tracking where every dollar is spent is vital to managing these expenses effectively.

In this article, we will implement LLM observability with RAG using just 10-12 lines of code. Observability helps us monitor key metrics such as latency, the number of tokens, prompts, and the cost per request.

Learning Objectives

- Understand the concept of LLM observability and how it helps in monitoring and optimizing the performance and cost of LLMs in applications.

- Explore the key metrics to track and monitor, such as token utilization, latency, cost per request, and prompt experimentation.

- Learn how to build a Retrieval-Augmented Generation pipeline with observability.

- Learn how to use BeyondLLM to further evaluate the RAG pipeline using the RAG triad metrics, i.e., context relevancy, answer relevancy, and groundedness.

- Tune chunk size and top-k values to reduce costs, use tokens efficiently, and improve latency.

This article was published as a part of the Data Science Blogathon.

What is LLM Observability?

Think of LLM observability the way you monitor your car's performance or track your daily expenses: it involves watching and understanding every detail of how these AI models operate. It helps you track usage by counting the number of "tokens" (the units of text that each request to the model processes). This helps you stay within budget and avoid unexpected expenses.

Additionally, it monitors performance by logging how long each request takes, ensuring that no part of the process is unnecessarily slow. It provides valuable insights by surfacing patterns and trends, helping you identify inefficiencies and areas where you might be overspending. LLM observability is a best practice when building applications for production, because it lets you automate an action pipeline that sends alerts if something goes wrong.
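As a minimal sketch of the latency-logging idea, assuming plain Python and no observability library: the decorator below times each call and appends a record to a list. `log_latency` and `fake_llm_call` are hypothetical names used only for illustration; tools such as Phoenix capture this kind of data for you, and this is not how they work internally.

```python
import functools
import time
from typing import Any, Callable


def log_latency(records: list) -> Callable:
    """Decorator factory: append each call's name and wall-clock latency to `records`."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            finally:
                # Record the latency even if the wrapped call raises.
                records.append({"name": fn.__name__,
                                "latency_s": time.perf_counter() - start})
        return wrapper
    return decorator


traces: list = []


@log_latency(traces)
def fake_llm_call(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM request.
    time.sleep(0.05)
    return prompt.upper()


if __name__ == "__main__":
    fake_llm_call("hello")
    print(traces)
```

Recording inside `finally` means failed requests still show up in the latency log, which is usually exactly when you want to see them.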

What is Retrieval Augmented Generation?

Retrieval Augmented Generation (RAG) is a technique in which relevant document chunks are passed to a Large Language Model (LLM) as in-context learning material (i.e., few-shot prompting) based on a user's query. Simply put, RAG consists of two parts: the retriever and the generator.

When a user enters a query, it is first converted into embeddings. The retriever then searches a vector database with these query embeddings to return the most relevant, or semantically similar, documents. These documents are passed as in-context material to the generator model, allowing the LLM to generate a grounded response. RAG reduces the likelihood of hallucinations and provides domain-specific responses based on the given knowledge base.
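The retrieve-then-generate flow can be sketched in a few lines of standard-library Python. This is a toy under stated assumptions: a bag-of-words count vector stands in for a real embedding model, a plain list stands in for the vector database, and the prompt wording in the hypothetical `augment` helper is illustrative only. It shows the shape of the retriever and of the prompt handed to the generator, not how any particular library implements them.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list, top_k: int = 2) -> list:
    """Retriever: rank stored chunks by similarity to the query embedding, keep top_k."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]


def augment(query: str, context: list) -> str:
    """Generator input: retrieved chunks are placed in the prompt as in-context material."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


chunks = [
    "the agent splits a task into simple steps",
    "phoenix shows token counts and latency",
    "each step of the task is executed in order",
]
print(augment("how is a task executed", retrieve("how is a task executed", chunks)))
```

With these sample chunks, the two chunks about task execution outrank the Phoenix chunk, so only they reach the prompt; a real pipeline would send that prompt to the LLM.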

Building a RAG pipeline involves several key components: a data source, text splitters, a vector database, embedding models, and large language models. RAG is widely used when you need to connect a large language model to a custom data source. For example, if you want to create your own ChatGPT for your class notes, RAG would be the ideal solution. This approach ensures that the model can provide accurate and relevant responses based on your specific data, making it highly useful for personalized applications.

Why use Observability with RAG?

How you build a RAG application depends on the use case, and each use case needs its own custom prompts for in-context learning. A custom prompt combines a system prompt and a user prompt: the system prompt holds the rules or instructions the LLM must follow, and the user prompt is the user's query augmented with the retrieved context. Writing a good prompt on the first attempt is very rare.
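To make the system/user split concrete, here is a sketch in the role/content message format used by OpenAI-style chat APIs. The helper `build_messages` and both system prompts are hypothetical examples, not the template BeyondLLM uses internally; keeping two variants side by side is the kind of prompt experiment that observability lets you compare on tokens and latency.

```python
def build_messages(system_prompt: str, question: str, context: list) -> list:
    """Combine a system prompt with an augmented user prompt in chat-message form."""
    context_block = "\n\n".join(context)
    # The user prompt is the query augmented with retrieved context.
    user_prompt = f"Context:\n{context_block}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


# Two hypothetical system-prompt variants to compare across runs.
SYSTEM_V1 = "You are a helpful assistant."
SYSTEM_V2 = ("Answer only from the provided context. "
             "If the answer is not in the context, say you don't know.")

messages = build_messages(SYSTEM_V2, "What does the video cover?", ["chunk one", "chunk two"])
print(messages)
```

The stricter `SYSTEM_V2` adds a few tokens to every request; traces make that trade-off against answer quality visible.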

Using observability with Retrieval Augmented Generation (RAG) is essential for efficient and cost-effective operation. Observability helps you monitor and understand every detail of your RAG pipeline, from token usage to latency, prompts, and response times. By keeping a close watch on these metrics, you can identify and address inefficiencies, avoid unexpected expenses, and optimize your system's performance. In short, observability provides the insights needed to fine-tune your RAG setup so that it runs smoothly, stays within budget, and consistently delivers accurate, domain-specific responses.

Let's take a practical example to understand why we need observability when using RAG. Suppose you have built the app and it is now in production.

Chat with YouTube: Observability with RAG Implementation

Let us now walk through the steps of implementing observability with RAG.

Step 1: Installation

Before we proceed with the code implementation, you need to install a few libraries: BeyondLLM, OpenAI, Phoenix, and the YouTube Transcript API. BeyondLLM is a library that helps you build advanced RAG applications efficiently, with support for observability, fine-tuning, embeddings, and model evaluation.

pip install beyondllm

pip install openai

pip install arize-phoenix[evals]

pip install youtube_transcript_api llama-index-readers-youtube-transcript

Step 2: Set Up the OpenAI API Key

Set the environment variable for the OpenAI API key, which is required to authenticate with and access OpenAI's services such as the LLM and embeddings.

import os, getpass

os.environ['OPENAI_API_KEY'] = getpass.getpass("API:")

# import required libraries

from beyondllm import source, retrieve, generator, llms, embeddings

from beyondllm.observe import Observer

Step 3: Set Up Observability

Enabling observability should be the first step in your code so that all subsequent operations are tracked.

Observe = Observer()

Observe.run()

Step 4: Define the LLM and Embedding Model

Since the OpenAI API key is already stored in an environment variable, you can now define the LLM and the embedding model used to retrieve the documents and generate the response.

llm = llms.ChatOpenAIModel()

embed_model = embeddings.OpenAIEmbeddings()

Step 5: RAG Part 1: Retriever

BeyondLLM is a framework designed for data scientists. To ingest data, you define the data source inside the `fit` function and specify its `dtype`; in our case, it's YouTube. We can also split the data into chunks to avoid the model's context-length limits and return only the relevant chunks. Chunk overlap defines the number of tokens repeated between consecutive chunks.

The auto retriever in BeyondLLM retrieves the top k relevant documents according to the retriever type. Several retriever types are available, such as hybrid, re-ranking, flag embedding re-rankers, and more. In this use case, we will use the normal retriever, i.e., an in-memory retriever.

data = source.fit("https://www.youtube.com/watch?v=IhawEdplzkI", dtype="youtube", chunk_size=512, chunk_overlap=50)

retriever = retrieve.auto_retriever(data, embed_model, type="normal", top_k=4)
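To see what `chunk_size` and `chunk_overlap` in `source.fit` control, the sketch below splits a token list into overlapping windows. It assumes whitespace-separated words as tokens, whereas real splitters count model tokens, and `chunk_tokens` is a hypothetical helper rather than BeyondLLM's own splitter.

```python
def chunk_tokens(tokens: list, chunk_size: int, chunk_overlap: int) -> list:
    """Split tokens into windows of chunk_size, repeating chunk_overlap tokens between neighbours."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the end of the text
    return chunks


words = "one two three four five six seven eight nine ten".split()
for c in chunk_tokens(words, chunk_size=4, chunk_overlap=1):
    print(c)
```

With `chunk_size=4` and `chunk_overlap=1`, each chunk repeats the last word of the previous one, so ten words become three chunks. A larger overlap preserves more context across chunk boundaries, at the cost of embedding more tokens.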

Step 6: RAG Part 2: Generator

The generator combines the user query with the relevant documents returned by the retriever and passes them to the Large Language Model. To facilitate this, BeyondLLM provides a generator module that chains the pipeline together, which also allows the pipeline to be evaluated on the RAG triad.

user_query = "summarize simple task execution worflow?"

pipeline = generator.Generate(query=user_query,retriever=retriever,llm=llm)

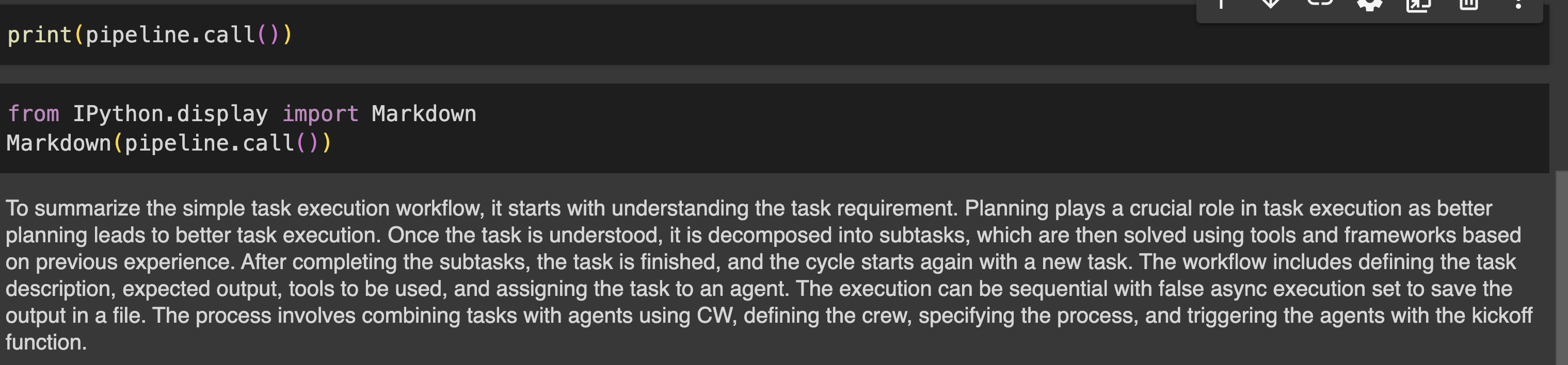

print(pipeline.name())Output

Step 7: Evaluate the Pipeline

The RAG pipeline can be evaluated using the RAG triad metrics: context relevancy, answer relevancy, and groundedness.

- Context relevancy: Measures how relevant the chunks retrieved by the auto_retriever are to the user's query. It shows how effectively the auto_retriever fetches contextually relevant information, ensuring a solid foundation for generating responses.

- Answer relevancy: Evaluates how relevant the LLM's response is to the user query.

- Groundedness: Determines how well the language model's responses are grounded in the information retrieved by the auto_retriever, with the aim of identifying and eliminating hallucinated content. This helps ensure that the outputs are based on accurate, factual information.

print(pipeline.get_rag_triad_evals())

# or

# run each metric separately

print(pipeline.get_context_relevancy()) # context relevancy

print(pipeline.get_answer_relevancy()) # answer relevancy

print(pipeline.get_groundedness()) # groundedness

Output:

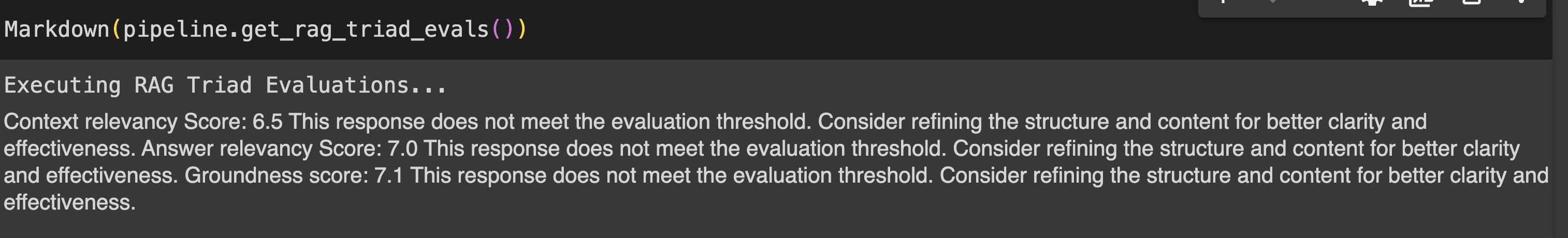
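For intuition about what the groundedness metric checks, here is a deliberately crude lexical proxy: the share of answer sentences whose longer words mostly appear in the retrieved context. This is an illustrative assumption only and not how BeyondLLM computes its score; word overlap cannot catch paraphrased or subtly wrong claims. `content_words` and `groundedness_proxy` are hypothetical helpers.

```python
import re


def content_words(text: str) -> set:
    # Lowercased alphabetic words of 4+ letters: a crude filter for "content" words.
    return set(re.findall(r"[a-z]{4,}", text.lower()))


def groundedness_proxy(answer: str, context: str, threshold: float = 0.5) -> float:
    """Fraction of answer sentences whose content words mostly appear in the context."""
    ctx = content_words(context)
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        words = content_words(sentence)
        if words and len(words & ctx) / len(words) >= threshold:
            supported += 1
    return supported / len(sentences)


context = "The planner breaks the task into steps. Each step is executed by a worker agent."
answer = "The planner breaks the task into steps. The moon orbits Jupiter quickly."
print(groundedness_proxy(answer, context))
```

Here the first answer sentence is fully supported by the context and the second is not, so the proxy reports half of the answer as grounded.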

Phoenix Dashboard: LLM Observability Analysis

Figure 1 shows the main Phoenix dashboard. When you run Observe.run(), it returns two links:

- Localhost: http://127.0.0.1:6006/

- If localhost is not working, you can use the other link to view the Phoenix app in your browser.

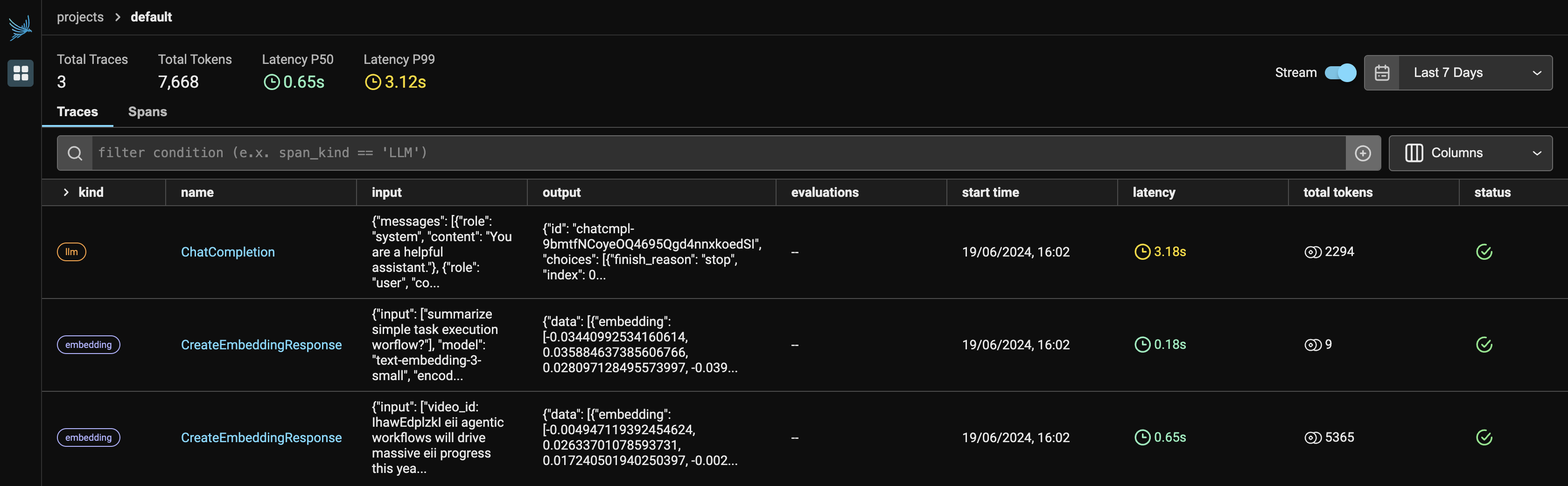

Since we are using two services from OpenAI, the dashboard lists both the LLM and the embeddings under the provider. It shows the number of tokens each one used, along with the latency, start time, input sent in the API request, and output generated by the LLM.

Figure 2 shows the trace details of the LLM call: a latency of 1.53 seconds, a token count of 2212, and information such as the system prompt, user prompt, and response.

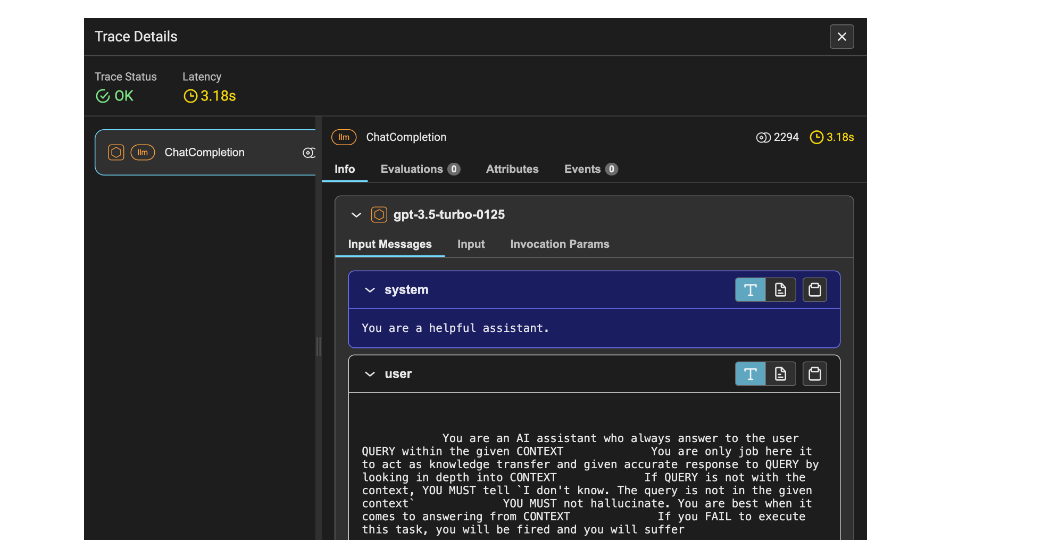

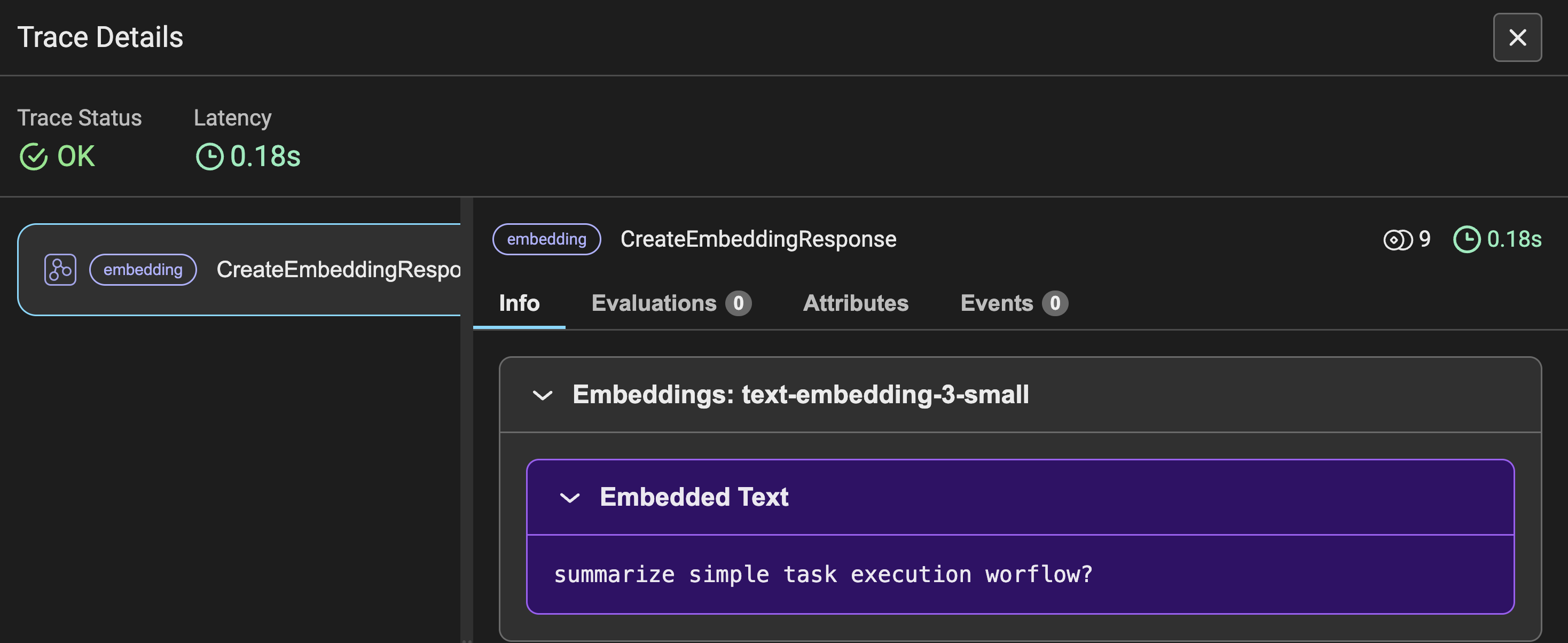

Figure 3 shows the trace details of the embeddings for the user's query, along with the other metrics seen in Figure 2. Instead of prompts, you see the input query being converted into embeddings.

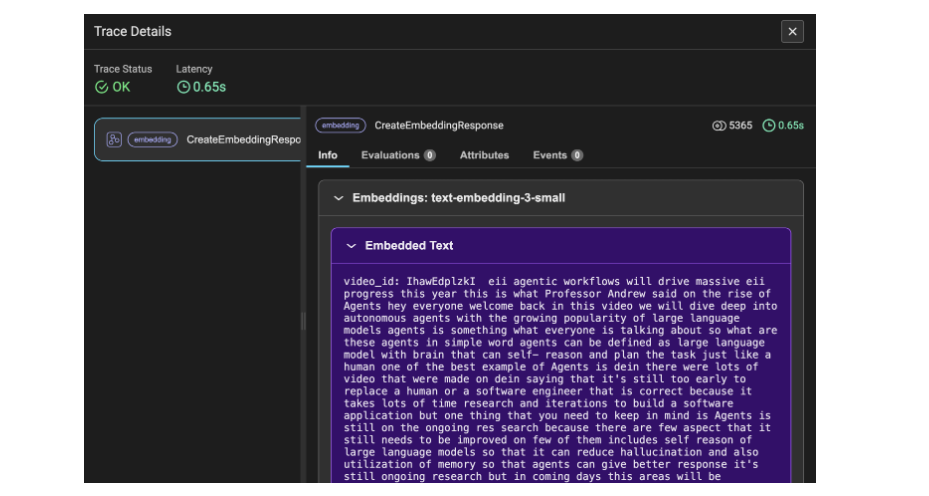

Figure 4 shows the trace details of the embeddings for the YouTube transcript data. Here, the data is split into chunks and then converted into embeddings, which is why the token count reaches 5365. In this trace, the input is the video transcript data.
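Token counts like the ones in these traces translate into cost with simple arithmetic: input (prompt) and output (completion) tokens are each billed at their own per-token rate. The sketch below uses placeholder prices, not real OpenAI rates (which change over time), and hypothetical token counts; substitute the figures from your own traces and your provider's current price list. `Pricing` and `request_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD per one million tokens. Placeholder values, not real provider prices."""
    input_per_million: float
    output_per_million: float


def request_cost(prompt_tokens: int, completion_tokens: int, pricing: Pricing) -> float:
    """Cost of one request: each token type is billed at its own rate."""
    return (prompt_tokens * pricing.input_per_million
            + completion_tokens * pricing.output_per_million) / 1_000_000


HYPOTHETICAL = Pricing(input_per_million=0.50, output_per_million=1.50)
print(f"${request_cost(2000, 200, HYPOTHETICAL):.6f}")
```

For an embedding trace such as the transcript in Figure 4, only input tokens are billed, so you would pass `completion_tokens=0` with the embedding model's input rate.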

Conclusion

To summarize, you have built a Retrieval Augmented Generation (RAG) pipeline together with advanced concepts such as evaluation and observability. With this approach, you can go further and write scripts that send alerts when something goes wrong, use the request traces and logs to gain better insight into how the application is performing, and, of course, keep costs within budget. Incorporating observability also helps you optimize model usage and keeps performance efficient and cost-effective for your specific needs.

Key Takeaways

- Understanding the need for observability when building LLM-based applications such as Retrieval Augmented Generation.

- Key metrics to trace, such as the number of tokens, latency, prompts, and cost for each API request.

- Implementing RAG and triad evaluations using BeyondLLM with minimal lines of code.

- Monitoring and tracking LLM observability using BeyondLLM and Phoenix.

- Insights from snapshots of the LLM and embedding trace details, which can be automated to improve the application's performance.

Frequently Asked Questions

A. When it comes to observability, it is useful for tracking closed-source models like GPT, Gemini, Claude, and others. Phoenix supports direct integrations with LangChain, LlamaIndex, and the DSPy framework, as well as with LLM providers such as OpenAI, Bedrock, and others.

A. BeyondLLM supports evaluating the Retrieval Augmented Generation (RAG) pipeline with any of the LLMs it supports, so you can easily evaluate RAG in BeyondLLM with Ollama and HuggingFace models. The evaluation metrics include context relevancy, answer relevancy, groundedness, and ground truth.

A. OpenAI API cost is based on the number of tokens you use. This is where observability helps: it keeps track of tokens per request, overall tokens, cost per request, and latency. These metrics can be used to trigger a function that alerts the user about costs.
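A minimal sketch of such an alert, assuming you already have per-request token counts and costs (for example from your traces, or from the `usage` field of an OpenAI response combined with a cost calculation): `CostMonitor` is a hypothetical helper that keeps running totals and fires a callback once when a budget is crossed. In production the callback might post to a chat channel or a pager instead of printing.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CostMonitor:
    """Accumulates per-request costs and calls `alert` once the running total reaches `budget_usd`."""
    budget_usd: float
    alert: Callable[[str], None] = print
    total_usd: float = 0.0
    total_tokens: int = 0
    alerted: bool = False

    def record(self, tokens: int, cost_usd: float) -> None:
        self.total_tokens += tokens
        self.total_usd += cost_usd
        if not self.alerted and self.total_usd >= self.budget_usd:
            self.alerted = True  # alert only once per monitor
            self.alert(f"Budget exceeded: ${self.total_usd:.4f} spent over {self.total_tokens} tokens")


alerts: list = []
monitor = CostMonitor(budget_usd=0.01, alert=alerts.append)
for _ in range(5):
    monitor.record(tokens=2200, cost_usd=0.003)  # hypothetical per-request figures
print(alerts)
```

With these made-up figures the budget is crossed on the fourth request, and the one-shot flag keeps the fifth request from sending a duplicate alert.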

The media shown in this article is not owned by Analytics Vidhya and is used at the Author's discretion.