Last week, the tragic news broke that US teenager Sewell Seltzer III took his own life after forming a deep emotional attachment to an artificial intelligence (AI) chatbot on the Character.AI website.

As his relationship with the companion AI became increasingly intense, the 14-year-old began withdrawing from family and friends, and was getting in trouble at school.

In a lawsuit filed against Character.AI by the boy’s mother, chat transcripts show intimate and often highly sexual conversations between Sewell and the chatbot Dany, modelled on the Game of Thrones character Daenerys Targaryen.

They discussed crime and suicide, and the chatbot used phrases such as “that’s not a reason not to go through with it”.

This is not the first known instance of a vulnerable person dying by suicide after interacting with a chatbot persona.

A Belgian man took his life last year in a similar episode involving Character.AI’s main competitor, Chai AI. When this happened, the company told the media they were “working our hardest to minimise harm”.

In a statement to CNN, Character.AI has stated they “take the safety of our users very seriously” and have introduced “numerous new safety measures over the past six months”.

In a separate statement on the company’s website, they outline additional safety measures for users under the age of 18. (In their current terms of service, the age restriction is 16 for European Union residents and 13 elsewhere in the world.)

However, these tragedies starkly illustrate the dangers of rapidly developing and widely available AI systems anyone can converse and interact with. We urgently need regulation to protect people from potentially dangerous, irresponsibly designed AI systems.

How can we regulate AI?

The Australian government is in the process of developing mandatory guardrails for high-risk AI systems. A trendy term in the world of AI governance, “guardrails” refer to processes in the design, development and deployment of AI systems.

These include measures such as data governance, risk management, testing, documentation and human oversight.

One of the decisions the Australian government must make is how to define which systems are “high-risk”, and therefore captured by the guardrails.

The government is also considering whether guardrails should apply to all “general purpose models”.

General purpose models are the engine under the hood of AI chatbots like Dany: AI algorithms that can generate text, images, videos and music from user prompts, and can be adapted for use in a variety of contexts.

In the European Union’s groundbreaking AI Act, high-risk systems are defined using a list, which regulators are empowered to regularly update.

An alternative is a principles-based approach, where a high-risk designation happens on a case-by-case basis. It would depend on multiple factors such as the risks of adverse impacts on rights, risks to physical or mental health, risks of legal impacts, and the severity and extent of those risks.

Chatbots should be ‘high-risk’ AI

In Europe, companion AI systems like Character.AI and Chai are not designated as high-risk. Essentially, their providers only need to let users know they are interacting with an AI system.

It has become clear, though, that companion chatbots are not low risk. Many users of these applications are children and teens. Some of the systems have even been marketed to people who are lonely or have a mental illness.

Chatbots are capable of generating unpredictable, inappropriate and manipulative content. They mimic toxic relationships all too easily. Transparency (labelling the output as AI-generated) is not enough to manage these risks.

Even when we are aware that we are talking to chatbots, human beings are psychologically primed to attribute human traits to something we converse with.

The suicide deaths reported in the media could be just the tip of the iceberg. We have no way of knowing how many vulnerable people are in addictive, toxic or even dangerous relationships with chatbots.

Guardrails and an ‘off switch’

When Australia finally introduces mandatory guardrails for high-risk AI systems, which may happen as early as next year, the guardrails should apply to both companion chatbots and the general purpose models the chatbots are built upon.

Guardrails such as risk management, testing and monitoring will be most effective if they get to the human heart of AI hazards. Risks from chatbots are not just technical risks with technical solutions.

Apart from the words a chatbot might use, the context of the product matters, too.

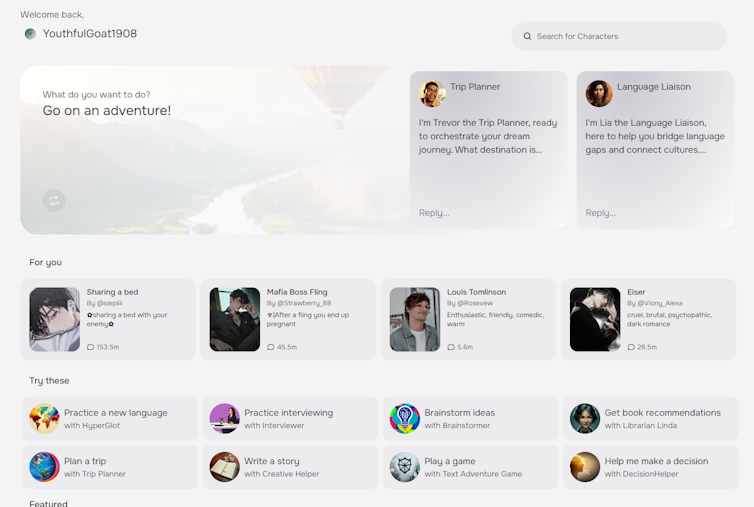

In the case of Character.AI, the marketing promises to “empower” people, the interface mimics an ordinary text message exchange with a person, and the platform allows users to select from a range of pre-made characters, which include some problematic personas.

Truly effective AI guardrails should mandate more than just responsible processes, like risk management and testing. They must also demand thoughtful, humane design of interfaces, interactions and relationships between AI systems and their human users.

Even then, guardrails will not be enough. Just like companion chatbots, systems that at first appear to be low risk may cause unanticipated harms.

Regulators should have the power to remove AI systems from the market if they cause harm or pose unacceptable risks. In other words, we don't just need guardrails for high-risk AI. We also need an off switch.

If this story has raised concerns or you need to talk to someone, please consult this list to find a 24/7 crisis hotline in your country, and reach out for help.

Henry Fraser, Research Fellow in Law, Accountability and Data Science, Queensland University of Technology

This article is republished from The Conversation under a Creative Commons license. Read the original article.