AI Chatbots Have Totally Infiltrated Scientific Publishing

One percent of scientific articles published in 2023 showed signs of generative AI’s potential involvement, according to a recent analysis

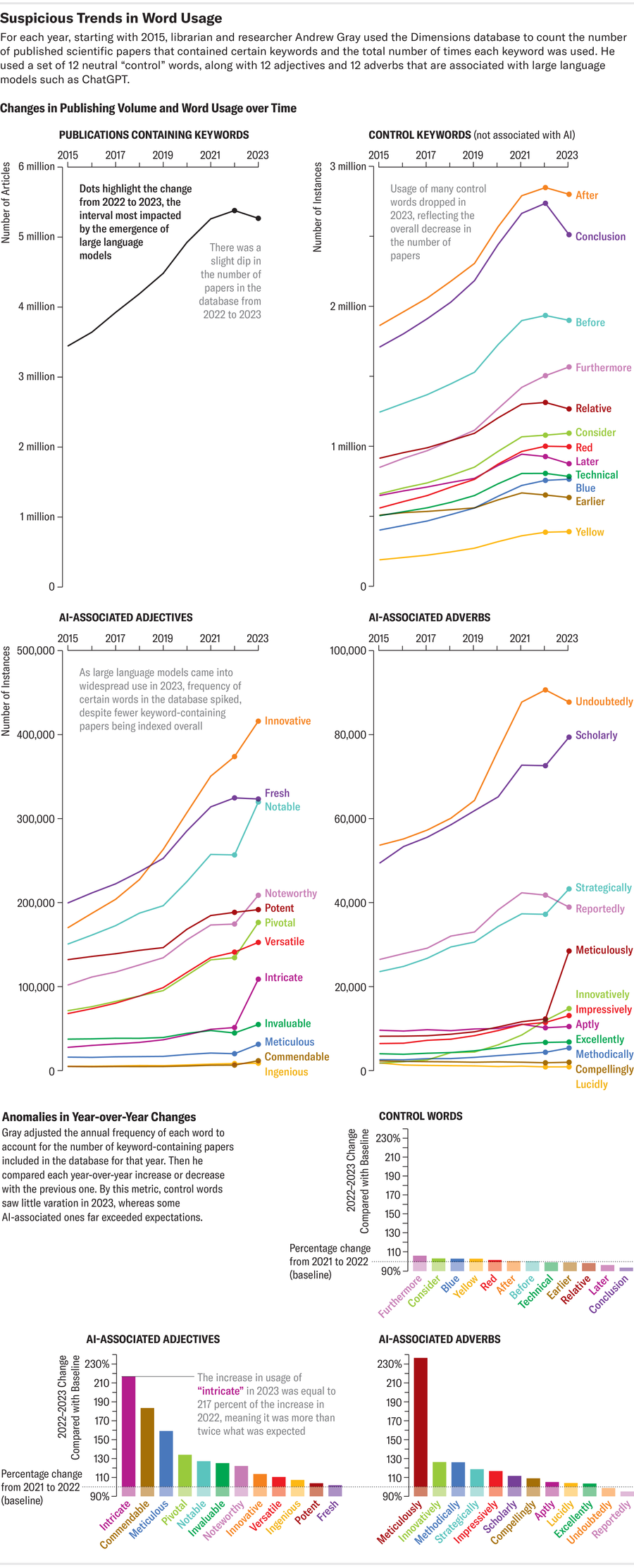

Amanda Montañez; Source: Andrew Gray

Researchers are misusing ChatGPT and other artificial intelligence chatbots to produce scientific literature. At least, that’s a new fear that some scientists have raised, citing a stark rise in suspicious AI shibboleths showing up in published papers.

Some of these tells, such as the inadvertent inclusion of “certainly, here is a possible introduction for your topic” in a recent paper in Surfaces and Interfaces, a journal published by Elsevier, are fairly obvious evidence that a scientist used an AI chatbot known as a large language model (LLM). But “that’s probably only the tip of the iceberg,” says scientific integrity consultant Elisabeth Bik. (A representative of Elsevier told Scientific American that the publisher regrets the situation and is investigating how it could have “slipped through” the manuscript evaluation process.) In most other cases AI involvement isn’t as clear-cut, and automated AI text detectors are unreliable tools for analyzing a paper.

Researchers from several fields have, however, identified a few key words and phrases (such as “complex and multifaceted”) that tend to appear more often in AI-generated sentences than in typical human writing. “When you’ve looked at this stuff long enough, you get a feel for the style,” says Andrew Gray, a librarian and researcher at University College London.

LLMs are designed to generate text, but what they produce may or may not be factually accurate. “The problem is that these tools are not good enough yet to trust,” Bik says. They succumb to what computer scientists call hallucination: simply put, they make stuff up. “Specifically, for scientific papers,” Bik notes, an AI “will generate citation references that don’t exist.” So if scientists place too much confidence in LLMs, study authors risk inserting AI-fabricated flaws into their work, adding more potential for error to the already messy reality of scientific publishing.

Gray recently hunted for AI buzzwords in scientific papers using Dimensions, a data analytics platform that its developers say tracks more than 140 million papers worldwide. He searched for words disproportionately used by chatbots, such as “intricate,” “meticulous” and “commendable.” These indicator words, he says, give a better sense of the problem’s scale than any “giveaway” AI phrase a careless author might copy into a paper. At least 60,000 papers, slightly more than 1 percent of all scholarly articles published globally last year, may have used an LLM, according to Gray’s analysis, which was released on the preprint server arXiv.org and has yet to be peer-reviewed. Other studies that focused specifically on subsections of science suggest even more reliance on LLMs. One such investigation found that up to 17.5 percent of recent computer science papers exhibit signs of AI writing.
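The basic logic of an indicator-word estimate can be sketched in a few lines of Python. This is a simplified illustration, not Gray’s actual procedure: it assumes a single pre-ChatGPT baseline year, treats any rise above that year’s rate as excess, and uses made-up counts rather than Dimensions data. The helper names `indicator_rate` and `excess_papers` are hypothetical.

```python
def indicator_rate(with_word: int, total: int) -> float:
    """Share of a year's papers that contain at least one indicator word."""
    return with_word / total


def excess_papers(baseline_rate: float, observed: int, total: int) -> float:
    """Papers above what the baseline (pre-chatbot) rate would predict."""
    expected = baseline_rate * total
    return observed - expected


# Hypothetical counts for illustration only; these are not Dimensions figures.
baseline = indicator_rate(with_word=2_000, total=100_000)  # pre-ChatGPT year
excess = excess_papers(baseline, observed=3_500, total=110_000)  # later year
print(f"baseline rate {baseline:.2%}, excess papers {excess:.0f}")
```

Scaling the baseline rate by the later year’s total keeps overall growth in publishing from being mistaken for a change in style. Because word use also drifts without chatbots, a single-year baseline like this is only a rough first pass.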

Amanda Montañez; Source: Andrew Gray

These findings are supported by Scientific American’s own search using Dimensions and several other scientific publication databases, including Google Scholar, Scopus, PubMed, OpenAlex and Internet Archive Scholar. This search looked for signs that could suggest an LLM was involved in producing the text of academic papers, measured by the prevalence of phrases that ChatGPT and other AI models typically append, such as “as of my last knowledge update.” In 2020 that phrase appeared only once in results tracked by four of the major paper analytics platforms used in the investigation. But it appeared 136 times in 2022. There were some limitations to this approach, though: it could not filter out papers that might have represented studies of AI models themselves rather than AI-generated content. And these databases include material beyond peer-reviewed articles in scientific journals.
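At its core, a phrase search of this kind counts, per year, how many records contain a telltale string. The sketch below uses a small hypothetical in-memory corpus of (year, text) pairs instead of any real database API, and the function name `phrase_counts_by_year` is illustrative. The second 2022 record is a paper about language models that merely quotes the phrase, which shows the limitation noted in the paragraph above: plain string matching cannot tell AI-generated text apart from research on AI.

```python
from collections import Counter

TELLTALE = "as of my last knowledge update"


def phrase_counts_by_year(records, phrase=TELLTALE):
    """Count records per year whose text contains `phrase` (case-insensitive)."""
    needle = phrase.lower()
    counts = Counter()
    for year, text in records:
        if needle in text.lower():
            counts[year] += 1
    return counts


# Hypothetical corpus: (publication year, abstract text).
corpus = [
    (2020, "We study protein folding dynamics in crowded cells."),
    (2022, "As of my last knowledge update, no trials had reported results."),
    (2022, "We survey chatbots that say 'as of my last knowledge update'."),
    (2022, "Soil microbiome diversity across temperate biomes."),
]
counts = phrase_counts_by_year(corpus)
print(dict(counts))
```

Both matching 2022 records are counted, even though only one looks like leftover chatbot output; separating the two would need manual review or extra metadata.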

Like Gray’s approach, this search also turned up subtler traces that may have pointed toward an LLM: it looked at how often stock phrases or words preferred by ChatGPT were found in the scientific literature and tracked whether their prevalence was notably different in the years just before the November 2022 release of OpenAI’s chatbot (going back to 2020). The findings suggest that something has changed in the lexicon of scientific writing, a development that might be caused by the writing tics of increasingly widespread chatbots. “There’s some evidence of some words changing steadily over time” as language normally evolves, Gray says. “But there’s this question of how much of this is long-term natural change of language and how much is something different.”
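One way to frame Gray’s question (how much is natural drift and how much is something different) is to fit a trend to the pre-release years and see how far a later year departs from it. This is an assumed, simplified approach with hypothetical rates, not the method used by Gray or by Scientific American: it fits an ordinary least-squares line to 2020 through 2022 and extrapolates to 2023.

```python
def fit_trend(years, rates):
    """Least-squares line through (year, rate) points; returns a predictor."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(rates) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, rates))
    slope = sxy / sxx
    # Predicting relative to the mean year avoids a huge intercept near year 0.
    return lambda year: mean_y + slope * (year - mean_x)


# Hypothetical uses of one word per 100,000 papers before ChatGPT's release.
pre_years = [2020, 2021, 2022]
pre_rates = [10.0, 11.0, 12.0]
predict = fit_trend(pre_years, pre_rates)

expected_2023 = predict(2023)  # what the slow pre-release drift alone predicts
observed_2023 = 30.0           # hypothetical observed rate after the release
print(f"expected {expected_2023:.1f}, observed {observed_2023:.1f}, "
      f"gap {observed_2023 - expected_2023:.1f}")
```

With these made-up numbers the steady drift accounts for only a small part of the 2023 value, and the remaining gap is the portion that “something different” would have to explain. Python 3.10 and later also provide `statistics.linear_regression` for the same straight-line fit.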

Signs of ChatGPT

For signs that AI may be involved in paper production or editing, Scientific American’s search delved into the word “delve,” which, as some informal monitors of AI-made text have pointed out, has seen an unusual spike in use across academia. An analysis of its use across the 37 million or so citations and paper abstracts in life sciences and biomedicine contained within the PubMed catalog highlighted how much the word is in vogue. Up from 349 uses in 2020, “delve” appeared 2,847 times in 2023 and has already cropped up 2,630 times so far in 2024, a 654 percent increase over 2020. Similar but less pronounced increases were seen in the Scopus database, which covers a wider range of sciences, and in Dimensions data.

Other words flagged by these monitors as AI-generated catchwords have seen similar rises, according to the Scientific American analysis: “commendable” appeared 240 times in papers tracked by Scopus and 10,977 times in papers tracked by Dimensions in 2020. Those numbers spiked to 829 (a 245 percent increase) and 20,536 (an 87 percent increase), respectively, in 2023. And in a perhaps ironic twist for would-be “meticulous” research, that word doubled on Scopus between 2020 and 2023.
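The percentages quoted for “delve” and “commendable” are simple relative changes, (later - earlier) / earlier × 100. The short check below recomputes them from the counts the analysis reports; the function name `percent_increase` is illustrative. It also makes explicit that the 654 percent figure for “delve” compares the partial 2024 count with 2020; measured against the full-year 2023 count, the rise is about 716 percent.

```python
def percent_increase(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


# Counts reported in the analysis: (label, earlier count, later count).
figures = [
    ("delve, PubMed, 2020 to 2024 so far", 349, 2_630),
    ("delve, PubMed, 2020 to 2023", 349, 2_847),
    ("commendable, Scopus, 2020 to 2023", 240, 829),
    ("commendable, Dimensions, 2020 to 2023", 10_977, 20_536),
]
for label, before, after in figures:
    print(f"{label}: {percent_increase(before, after):.0f}%")
```

Running it prints 654, 716, 245 and 87 percent, respectively, so the published figures are consistent with the raw counts.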

More Than Mere Words

In a world where academics live by the mantra “publish or perish,” it’s unsurprising that some are using chatbots to save time or to bolster their command of English, which is often required for publication in their field and may be a writer’s second or third language. But employing AI technology as a grammar or syntax helper could be a slippery slope to misapplying it in other parts of the scientific process. Writing a paper with an LLM co-author, the fear goes, may lead to key figures generated whole cloth by AI or to peer reviews that are outsourced to automated evaluators.

These are not purely hypothetical scenarios. AI certainly has been used to produce scientific diagrams and illustrations that have made their way into academic papers (including, notably, one bizarrely endowed rodent) and even to replace human participants in experiments. And the use of AI chatbots may have permeated the peer-review process itself, according to a preprint study of the language in feedback given to scientists who presented research at conferences on AI in 2023 and 2024. The prospect of AI-generated judgments creeping into academic papers alongside AI text concerns experts, including Matt Hodgkinson, a council member of the Committee on Publication Ethics, a U.K.-based nonprofit organization that promotes ethical academic research practices. Chatbots are “not good at doing analysis,” he says, “and that’s where the real danger lies.”